Key Points:

- Security and privacy concerns: Increased use of AI systems raises issues like data manipulation, model vulnerabilities, and information leaks.

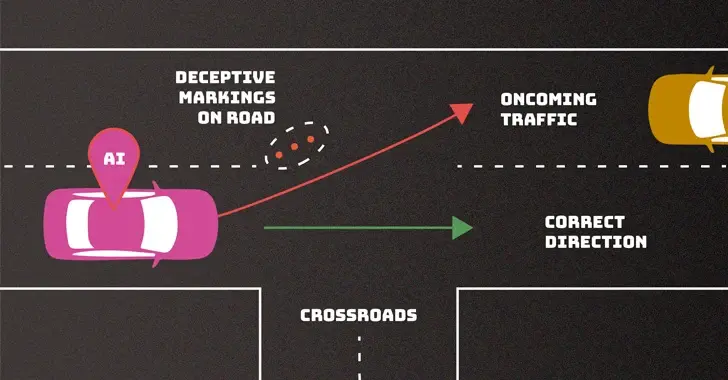

- Threats at various stages: Training data, software, and deployment are all vulnerable to attacks like poisoning, data breaches, and prompt injection.

- Attacks with broad impact: Availability, integrity, and privacy can all be compromised by evasion, poisoning, privacy, and abuse attacks.

- Attacker knowledge varies: Threats can be carried out by actors with full, partial, or minimal knowledge of the AI system.

- Mitigation challenges: Robust defenses are currently lacking, and the tech community needs to prioritize their development.

- Global concern: NIST’s warning echoes recent international guidelines emphasizing secure AI development.

Overall:

NIST identifies serious security and privacy risks associated with the rapid deployment of AI systems, urging the tech industry to develop better defenses and implement secure development practices.

Comment:

From the look of things, it’s going to get worse before it gets better.

Reminds me of when people in San Francisco, USA started putting cones on top of autonomous taxis and the cars wouldn’t move.