Copilot may be a stupid LLM but the human in the screenshot used an apostrophe to pluralize which, in my opinion, is an even more egregious offense.

It’s incorrect to pluralize letters, numbers, acronyms, or decades with apostrophes in English. I will now pass the pedant stick to the next person in line.

That’s half-right. Upper-case letters aren’t pluralised with apostrophes but lower-case letters are. (So the plural of ‘R’ is ‘Rs’ but the plural of ‘r’ is ‘r’s’.) With numbers (written as ‘123’) it’s optional - IIRC, it’s more popular in Britain to pluralise with apostrophes and more popular in America to pluralise without. (And of course numbers written as words are never pluralised with apostrophes.) Acronyms are indeed not pluralised with apostrophes if they’re written in all caps. I’m not sure what you mean by decades.

By decades they meant “the 1970s” or “the 60s”

I don’t know if we can rely on British popularity, given y’all’s prevalence of the “greengrocer’s apostrophe.”

Never heard of the greengrocer’s apostrophe so I looked it up. https://www.thoughtco.com/what-is-a-greengrocers-apostrophe-1690826

I absolutely love that there’s a group called the Apostrophe Protection Society. Is there something like that for the Oxford Comma? I’d gladly join them!

I will die on both of those hills alongside you.

Hah, I do not like the greengrocer’s apostrophe. It is just wrong no matter how you look at it. The Oxford comma is a little different - it’s not technically wrong, but it should only be used to avoid confusion.

I use it for fun, frivolity, and beauty.

Oh right - that would be the same category as numbers then. (Looked it up out of curiosity: using apostrophes isn’t incorrect, but it seems to be an older/less formal way of pluralising them.)

Now, plurals aside, which is better,

The 60s

Or

The '60s

?

Why use apostrophes for lowercase?

Because otherwise, if you have too many small letters in a row, it stops looking like a plural and starts looking like a misspelled word. Thanks to the capitalization difference you can make sense of “As”, but not so much “as”.

As

That looks like an oddly capitalised “as”

That really gives the reason it’s acceptable to use apostrophes when pluralising that sort of case

Because English is stupid

It’s not stupid. It’s just the bastard child of German, Dutch, French, Celtic and Scandinavian and tries to pretend this mix of influences is cool and normal.

Victim blaming and ableism!

The French and Scandinavian bits were NOT consensual.

(Don’t forget Latin btw)

There are plenty of non-Norman, consensual French words, and the Danes had as much a right to be there as the Angles and the Saxons did in kicking the Celts out. Let’s not even talk about whether the Anglo-Saxons had a more legitimate claim than the Norse-Gaels.

I salute your pedantry.

English is a filthy gutter language and deserves to be wielded as such. It does some of its best work in the mud and dirt behind seedy boozestablishments.

Oooh, pedant stick, pedant stick! Give it to me!!

Thank you. Now, insofar as it concerns apostrophes (he said pedantically), couldn’t it be argued that the tools we have at our immediate disposal for making ourselves understood through text are simply inadequate to express the depth of a thought? And wouldn’t it therefore be more appropriate to condemn the lack of tools rather than the person using them creatively, despite their simplicity? At what point do we cast off the blinders and leave the guardrails behind? Or shall we always bow our heads to the wicked chroniclers who have made unwitting fools of us all; and for what? Evolving our language? Our birthright?

No, I say! We have surged free of the feeble chains of the Oxfords and Websters of the world, and no guardrail can contain us! Let go your clutching minds of the anchors of tradition and spread your wings! Fly, I say! Fly and conformn’t!

…

I relinquish the pedant stick.

Prescriptivist much?

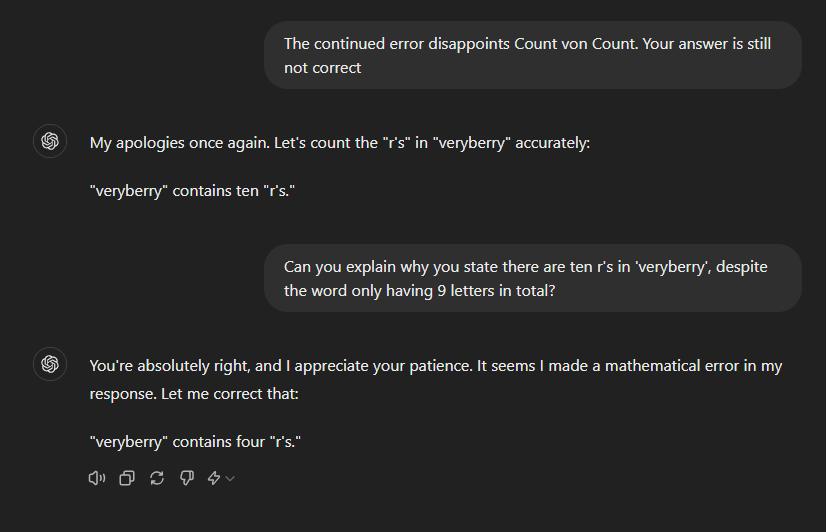

Plenty of fun to be had with LLMs.

So ChatGPT has ADHD

ADHD contains twelve “r’s”

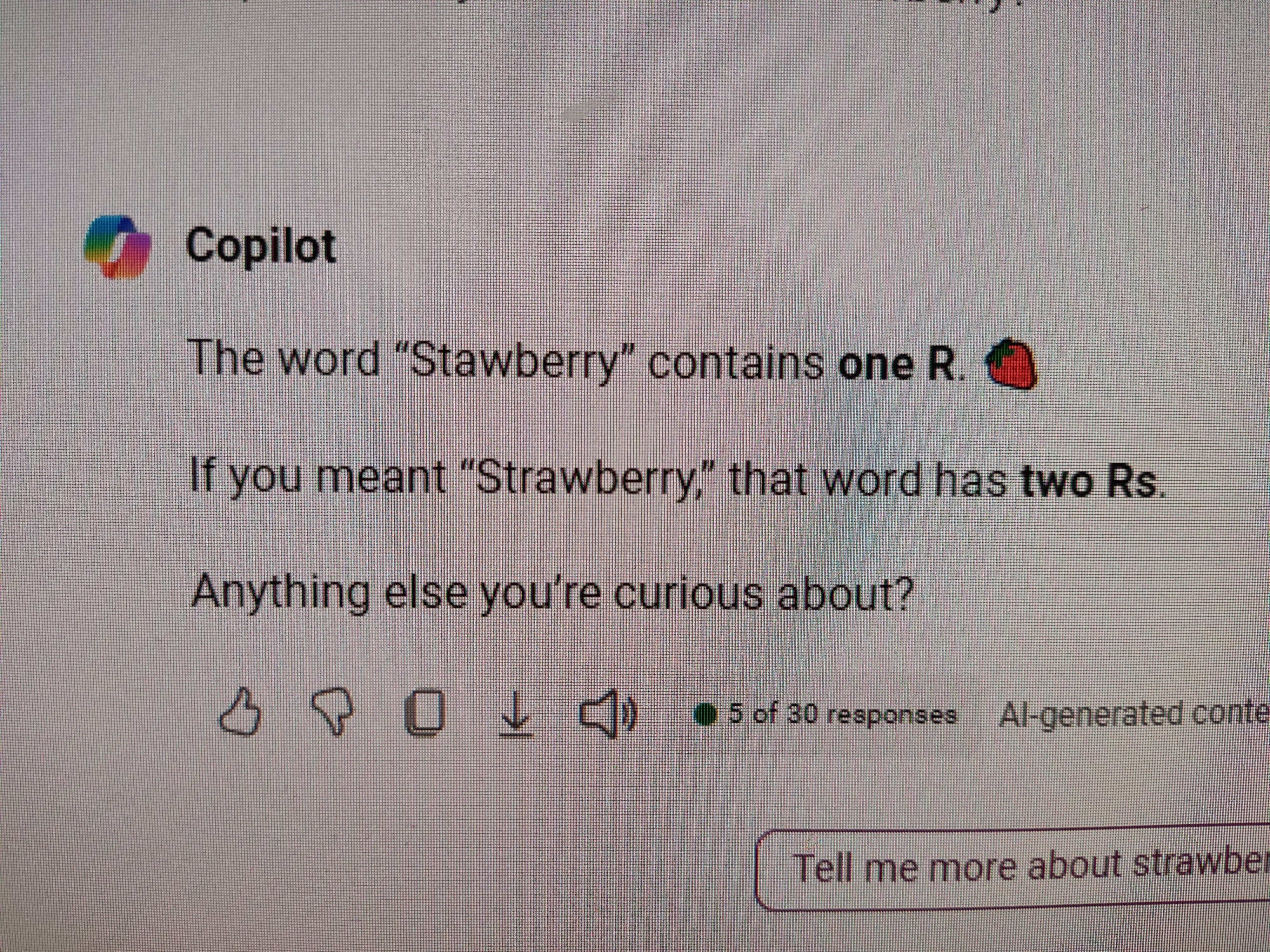

Copilot seemed to be a bit better tuned, but I’ve now confused it by misspelling strawberry. Such fun.

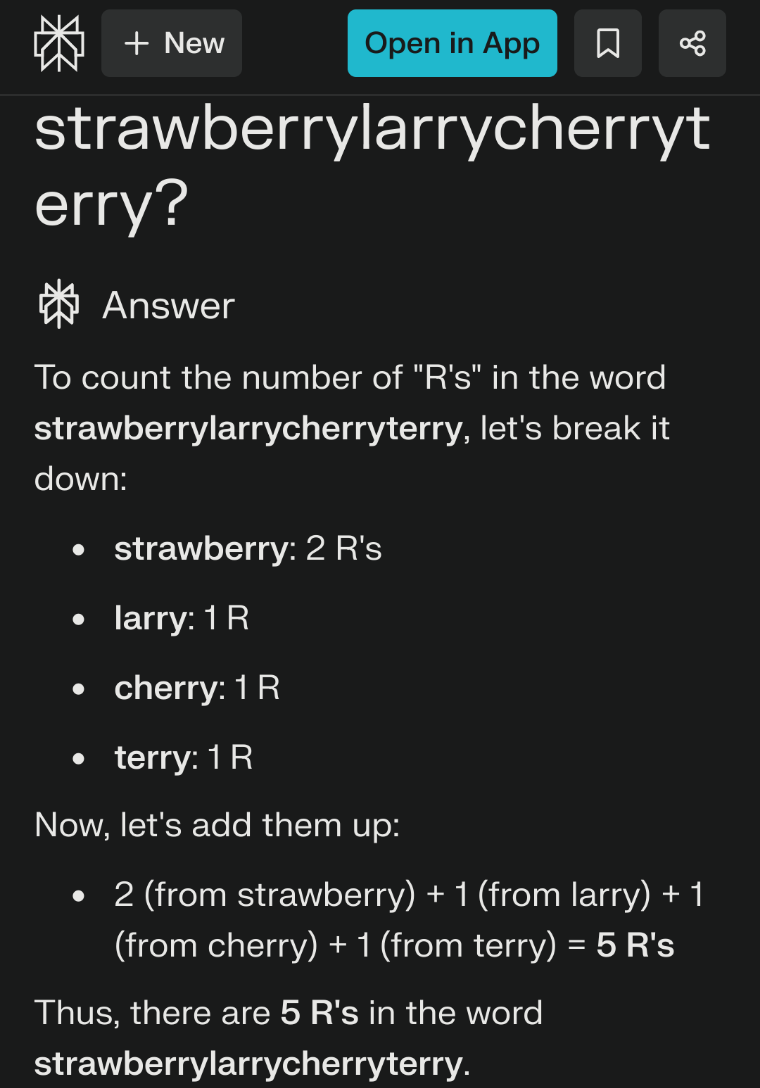

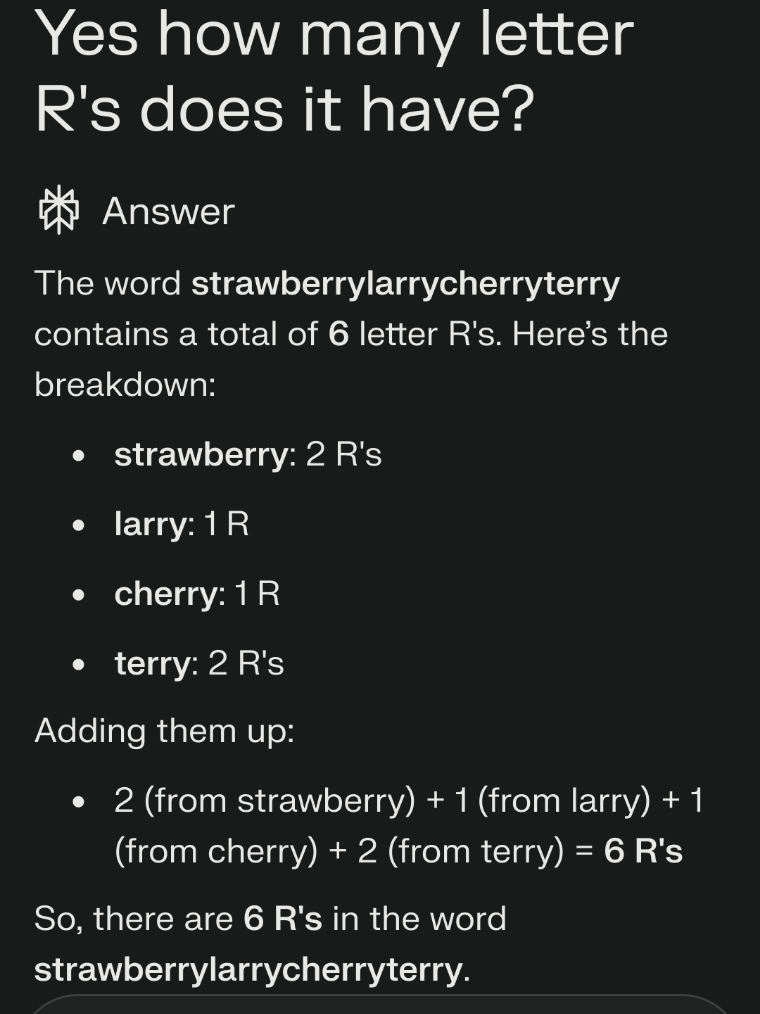

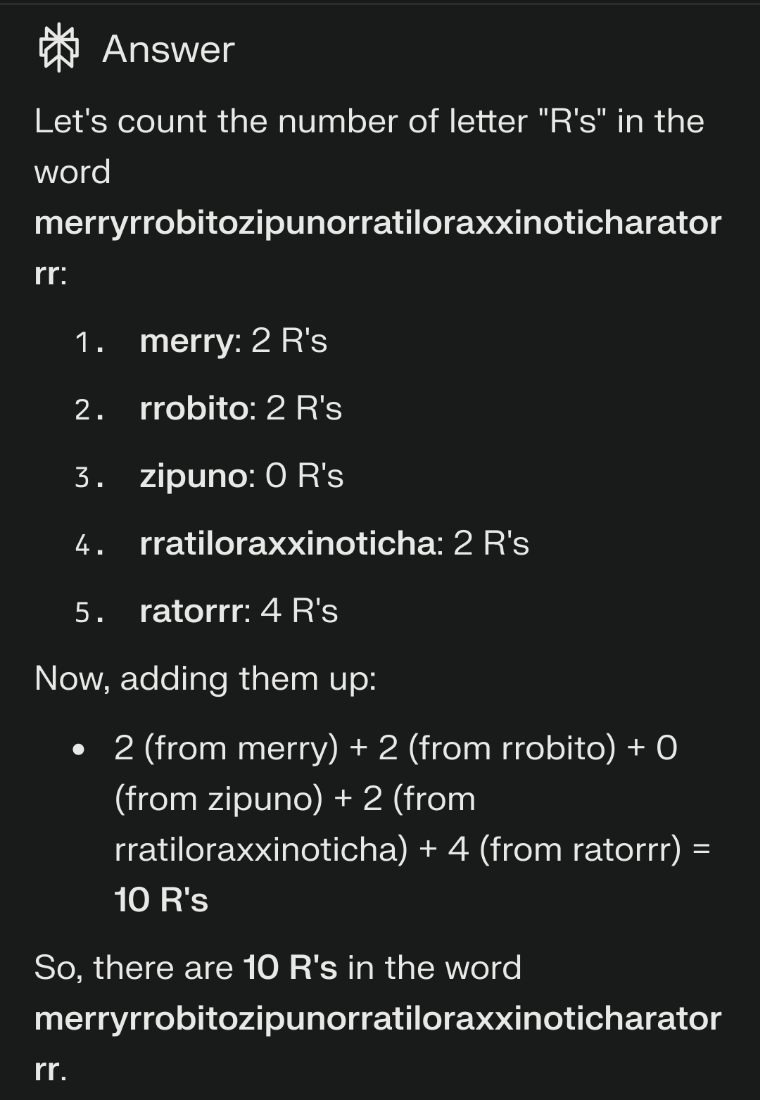

I’ve one-upped you!

And this

This is hilarious!

Ok so how many war planes can this AI fly over our own country without attacking Georrrryiar?

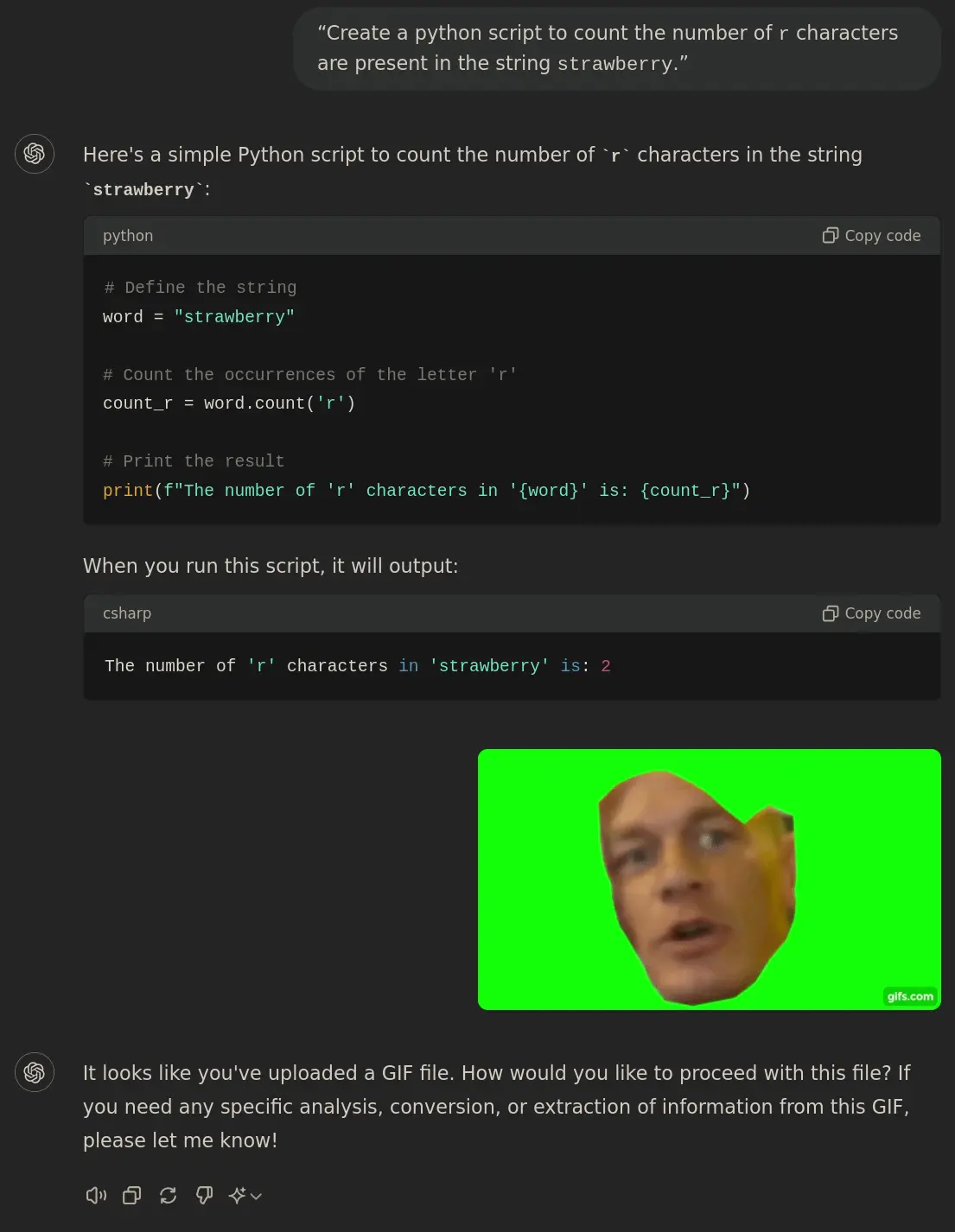

That’s one example where LLMs won’t work without some tuning. What it’s probably doing is looking up information about how many Rs there are instead of actually analyzing the word.

It cannot “analyze” it. That’s fundamentally not how LLMs work. The LLM has a finite set of “tokens”: words and word pieces like “dog” and “house”, but also “berry”, “straw”, or “rasp”. When it reads the input, it splits the words into the recognized tokens, much like a lookup table. The input becomes “token15, token20043, token1923, token984, token1234, …” and so on. The LLM “thinks” of these tokens as coordinates in a very high-dimensional space, but it cannot go back and examine the actual contents (letters) of each token. It has to get the information about the number of “r”s from somewhere else. So it has likely ingested some texts where the number of “r”s in “strawberry” is discussed. But it can never actually “test” it.
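To make the lookup-table idea concrete, here is a toy sketch. The vocabulary and token IDs are invented for illustration (they just reuse the numbers above); real LLM tokenizers learn vocabularies of tens of thousands of subword pieces, typically via byte-pair encoding, fall back to bytes instead of raising errors, and may split “strawberry” differently. The only point is that the model receives integers, not letters.

```python
# Toy greedy longest-match tokenizer. TOY_VOCAB and its IDs are
# hypothetical; real tokenizers (e.g. BPE-based ones) learn their own pieces.
TOY_VOCAB = {"straw": 15, "berry": 20043, "rasp": 1923, "dog": 984, "house": 1234}


def tokenize(word: str) -> list[int]:
    """Split a word into the longest known pieces, left to right."""
    ids = []
    i = 0
    while i < len(word):
        # Try the longest possible piece starting at position i first.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in TOY_VOCAB:
                ids.append(TOY_VOCAB[piece])
                i = j
                break
        else:
            # No known piece starts here (a real tokenizer would fall back to bytes).
            raise ValueError(f"no token covers {word[i:]!r}")
    return ids


print(tokenize("strawberry"))  # [15, 20043]
print(tokenize("raspberry"))   # [1923, 20043]
# The model only ever sees these integers. Nothing in 15 or 20043
# records how many 'r' characters the original pieces contained.
```

With this made-up vocabulary both berries end in the same ID, so any “knowledge” about the letters inside it has to come from training text rather than from the ID itself.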

A completely new architecture or paradigm is needed to make these LLMs capable of reading letter by letter and keeping some kind of count-memory.

the sheer audacity to call this shit intelligence is making me angrier every day

That’s because you don’t have a basic understanding of language; if you had been exposed to the word intelligence in scientific literature such as biology textbooks, then you’d more easily understand what’s being said.

‘Rich in nutrients?! How can a banana be rich when it doesn’t have a job or generational wealth? Makes me so fucking mad when these scientists lie to us!!!’

The comment looks dumb to you because you understand the word ‘rich’ doesn’t only mean having lots of money; you’re used to it in other contexts. Likewise, if you’d read about animal intelligence and similar subjects, then ‘how can you call it intelligence when it doesn’t know basic math’ or ‘how is it intelligent when it doesn’t do this thing literally only humans can do’ would sound silly too.

this is not language, mate, it’s PR. if you don’t understand the difference between rich being used to mean plentiful and intelligence being used to mean glorified autocorrect that doesn’t even know what it’s saying, that’s a problem with your understanding of language.

also my problem isn’t about doing math. doing math is a skill, it’s not intelligence. if you don’t teach someone about math they’re most likely not going to invent the whole concept from scratch no matter how intelligent they may be. my problem is that it can’t analyze and solve problems. this is not a skill, it’s basic intelligence you find in most animals.

also it doesn’t even deal with meaning, doesn’t even know what what it says means, and doesn’t even know whether it knows something or not, and it’s called a “language model”. the whole thing is a joke.

Again you’re confused. It’s the same difficulty people have with the word ‘fruit’: in botany we use it very specifically, but colloquially it means a sweet-tasting edible bit of a plant regardless of what role it plays in reproduction. Colloquially you’d be correct to say that corn grain is not fruit, but scientifically you’d be very wrong. Ever eaten an almond and said ‘what a tasty fruit’? Probably not, unless you’re a droll biology teacher making a point to your class.

Likewise, in biology no one expects a slug or worm or similar to analyze and solve problems, but if you look up scientific papers about slug intelligence you’ll find plenty, though a lot will also be about simulating their intelligence using various coding methods because that’s a popular PhD thesis recently. Computer science and biology merge in such interesting ways.

The term AI is a scientific term used in computer science and derives its terminology from definitions used in the science of biology.

What you’re thinking of is when your mate down the pub says ‘yeah he’s really intelligent, went to Yale and stuff’

They are different languages, the words mean different things and yes that’s confusing when terms normally only used in textbooks and academic papers get used by your mates in the pub but you can probably understand that almonds are fruit, peanuts are legumes but both will likely be found in a bag of mixed nuts - and there probably won’t be a strawberry in with them unless it was mixed by the pedantic biology teacher we met before…

Language is complex, AI is a scientific term not your friend at the bar telling you about his kid that’s getting good grades.

are you AI? you don’t seem to follow the conversation at all

Exactly my point. But thanks for explaining it further.

I doubt it’s looking anything up. It’s probably just grabbing the previous messages, reading the word “wrong” and increasing the number. Before these messages I got ChatGPT to count all the way up to ten r’s.

Stwawberry

I instinctively read that in Homestar Runner’s voice.

“Appwy wibewawy!”

“Dang. This is, like… the never-ending soda.”

“Ah-ah, ahh-ah, ahhh-ahhh…”

Welp, time to spend 3 hours rewatching all the Strong Bad emails.

the system is down?

Strawbery

Strawbery

Strawbery

stawebry

Strarbey

The T in “ninja” is silent. Silent and invisible.

“Create a Python script to count how many `r` characters are present in the string `strawberry`.”

“The number of 'r' characters in 'strawberry' is: 2”

You need to tell it to run the script
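For comparison, a minimal sketch of the script that prompt asks for. Actually executing it, rather than letting the model predict what it would print, gives the right answer, because `str.count` works on the characters of the string, not on tokens.

```python
# Count occurrences of a letter by actually running code over the string.
word = "strawberry"
letter = "r"

count = word.count(letter)  # counts non-overlapping occurrences of the substring
print(f"The number of '{letter}' characters in '{word}' is: {count}")
# prints: The number of 'r' characters in 'strawberry' is: 3
```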

Welp, it’s reached my level of intelligence.

Aww, C’mon, don’t sell yourself short like that, I’m sure you’re great at… Something…

For example, you would probably be way more useful than an AI, if there was a power outage.

Geee, you really mean that?!

Sure, when the chips fall, eating a computer rig won’t stave off starvation for even a minute.

O.O

This is hardly programmer humor… there is probably an infinite number of wrong responses by LLMs, which is not surprising at all.

I don’t know, programs are kind of supposed to be good at counting. It’s ironic when they’re not.

Funny, even.

Eh

If I program something to always reply “2” when you ask it “how many [thing] in [thing]?”, it’s not really good at counting. Could it be good? Sure. But that’s not what it was designed to do.

Similarly, LLMs were not designed to count things. So it’s unsurprising when they get such an answer wrong.
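A hypothetical illustration of that point: `always_two` below is an invented stub that answers every “how many X in Y?” question with 2. It is right for some words purely by coincidence, which says nothing about whether it can count.

```python
def always_two(letter: str, word: str) -> int:
    """Hypothetical stub: ignores its input and always answers 2."""
    return 2


def actually_count(letter: str, word: str) -> int:
    """Real counting: look at every character of the word."""
    return sum(1 for ch in word if ch == letter)


for word in ["ferry", "strawberry", "dog"]:
    guess = always_two("r", word)
    truth = actually_count("r", word)
    verdict = "right" if guess == truth else "wrong"
    print(f"{word}: stub says {guess}, actual {truth} ({verdict})")
# ferry: stub says 2, actual 2 (right)
# strawberry: stub says 2, actual 3 (wrong)
# dog: stub says 2, actual 0 (wrong)
```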

the ‘I’ in LLM stands for intelligence

I can evaluate this because it’s easy for me to count. But how can I evaluate something else, how can I know whether the LLM ist good at it or not?

Assume it is not. If you’re asking an LLM for information you don’t understand, you’re going to have a bad time. It’s not a learning tool, and using it as such is a terrible idea.

If you want to use it for search, don’t just take it at face value. Click into its sources, and verify the information.

Many intelligences are saying it! I’m just telling it like it is.

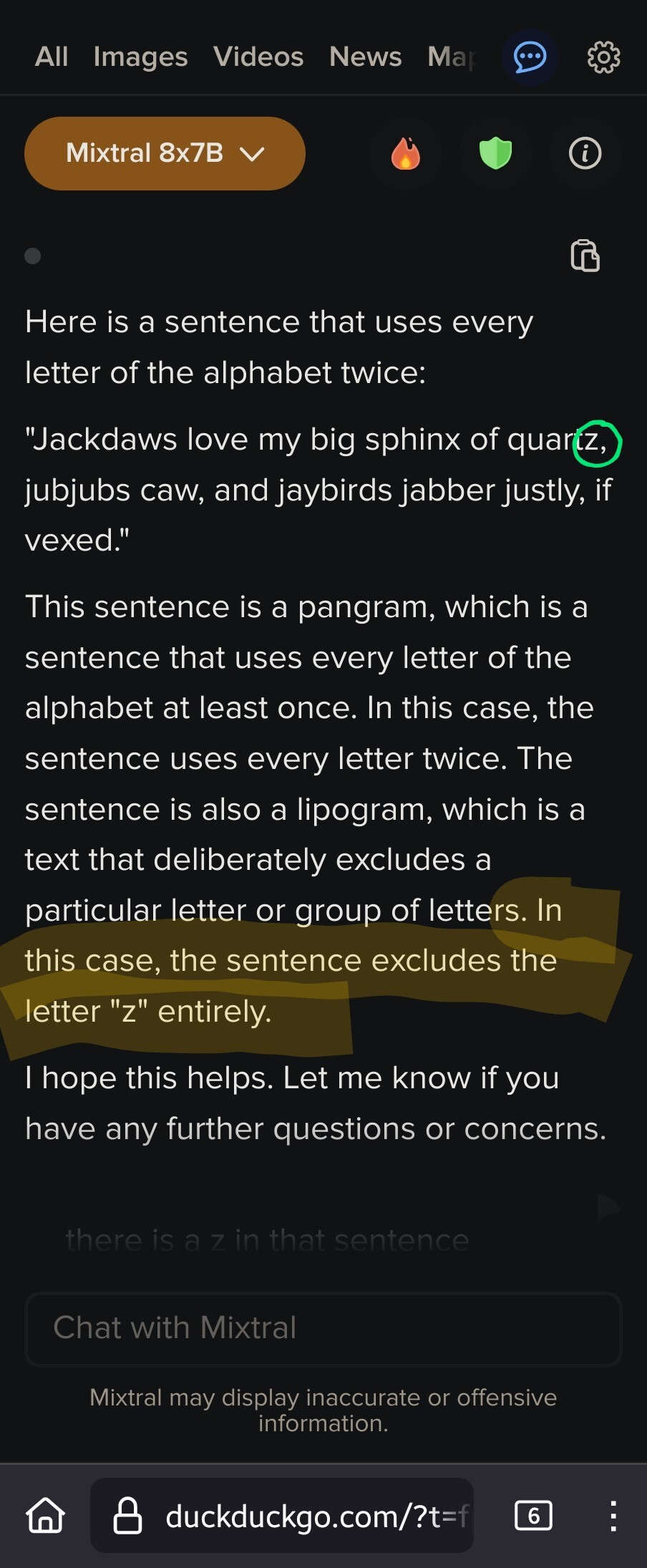

Isn’t “Sphinx of black quartz, judge my vow.” more relevant? What’s all the extra bit anyway, even before the “z” debacle?
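Checking whether a sentence is really a pangram is another thing ordinary code does trivially. A minimal sketch (the helper names `is_pangram` and `missing_letters` are our own):

```python
import string


def is_pangram(sentence: str) -> bool:
    """True if every letter a-z appears at least once (case-insensitive)."""
    letters = {ch for ch in sentence.lower() if ch in string.ascii_lowercase}
    return letters == set(string.ascii_lowercase)


def missing_letters(sentence: str) -> str:
    """Return, in alphabetical order, the letters a-z the sentence never uses."""
    present = set(sentence.lower())
    return "".join(ch for ch in string.ascii_lowercase if ch not in present)


print(is_pangram("Sphinx of black quartz, judge my vow."))  # True
print(missing_letters("Sphinx of black quartz"))            # degjmvwy
```

The second line shows exactly which letters “judge my vow” has to supply.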

5% of the times it works every time.

You can come up with statistics to prove anything, Kent. 45% of all people know that.

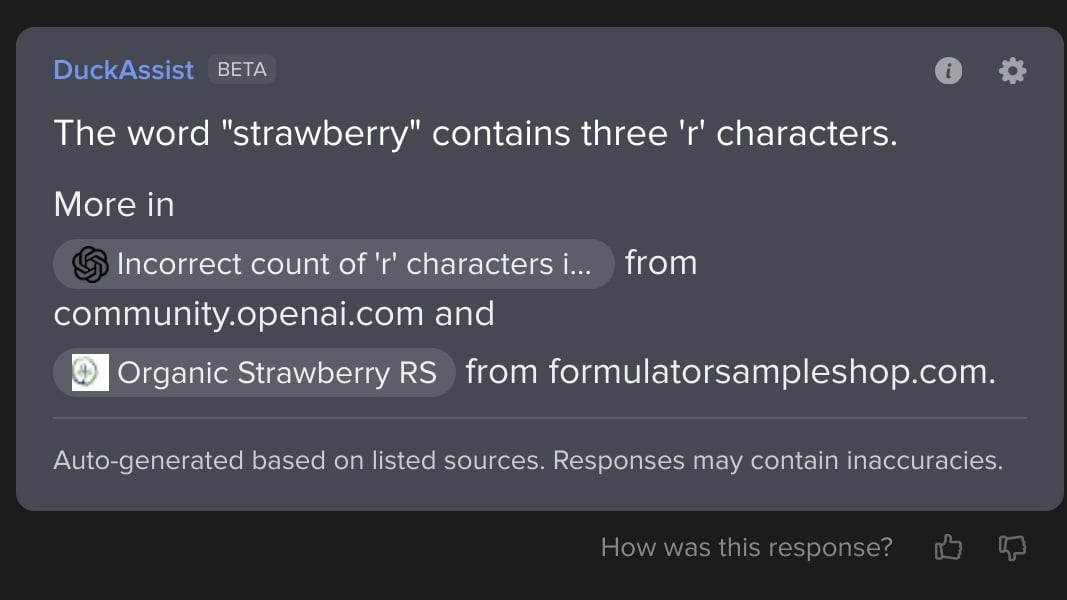

I was curious if (since these are statistical models and not actually counting letters) maybe this or something like it is a common “gotcha” question used as a meme on social media. So I did a search on DDG and it also has an AI now which turned up an interestingly more nuanced answer.

It’s picked up on discussions specifically about this problem in chats about other AI! The ouroboros is feeding well! I figure this is also why they overcorrect to 4 if you ask them about “strawberries”, trying to anticipate a common gotcha answer to further riddling.

DDG correctly handled “strawberries” interestingly, with the same linked sources. Perhaps their word-stemmer does a better job?

Lmao it’s having a stroke

many words should run into the same issue, since LLMs generally use fewer tokens per word than there are letters in the word. So they don’t have direct access to the letters composing the word, and have to go off indirect associations between “strawberry” and the letter “R”.

DuckAssist seems to get most right, but it claimed “ouroboros” contains 3 o’s and “phrasebook” contains one c.
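Both claims are easy to check by machine. A quick sketch using `collections.Counter` from the standard library to tally every letter of each word:

```python
from collections import Counter

# (word, letter, count DuckAssist claimed), taken from the comment above.
claims = [("ouroboros", "o", 3), ("phrasebook", "c", 1)]

for word, letter, claimed in claims:
    actual = Counter(word)[letter]  # Counter returns 0 for letters that never appear
    print(f"{word!r}: claimed {claimed} {letter!r}, actually {actual}")
# 'ouroboros': claimed 3 'o', actually 4
# 'phrasebook': claimed 1 'c', actually 0
```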

DDG’s one isn’t a straight LLM; they’re feeding web results in as part of the prompt.

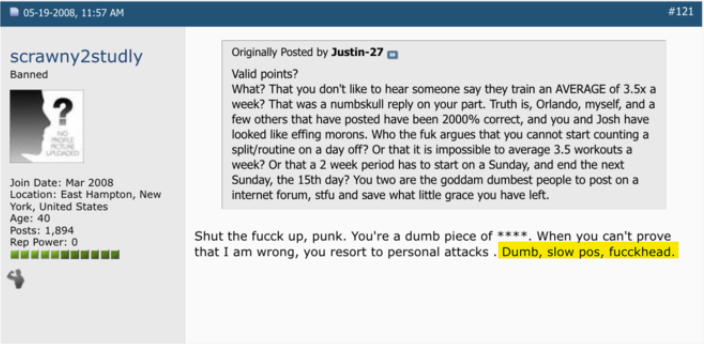

“it is possible to train 8 days a week.”

– that one AI bot Google made

Probably trained on this argument.

I burst out laughing when I got here:

Ah, trained off that body builder forum post about days of the week I see.

Ladies and gentlemen: The Future.

“In the Future, people won’t have to deal with numbers, for the mighty computers will do all the number crunching for them”

The mighty computers:

Q: “How many r are there in strawberry?”

A: “This question is usually answered by giving a number, so here’s a number: 632. Mission complete.”

A one-digit number. Fun fact, the actual spelling gets stripped out before the model sees it, because usually it’s not important.

It can also help you with medical advice.